Te Herenga Waka—Victoria University of Wellington PhD graduate JP Yepez is the latest computer engineer to turn his talents to creating a mechatronic chordophone, otherwise known as a ‘guitar robot’.

Guitar robots can be programmed to play existing music and can also be used to create new compositions in real time.

JP and his supervisors, Professor Dale Carnegie from the School of Engineering and Computer Science and Jim Murphy, senior lecturer from the New Zealand School of Music—Te Kōkī, created two guitar robots. The first, Protochord, is (as the name suggests) a prototype with a single string that they could use to test ideas and develop their final project.

“The University has a strong history in research and creation of guitar and bass robots, so we were able to build on this existing history, which was quite exciting,” JP says.

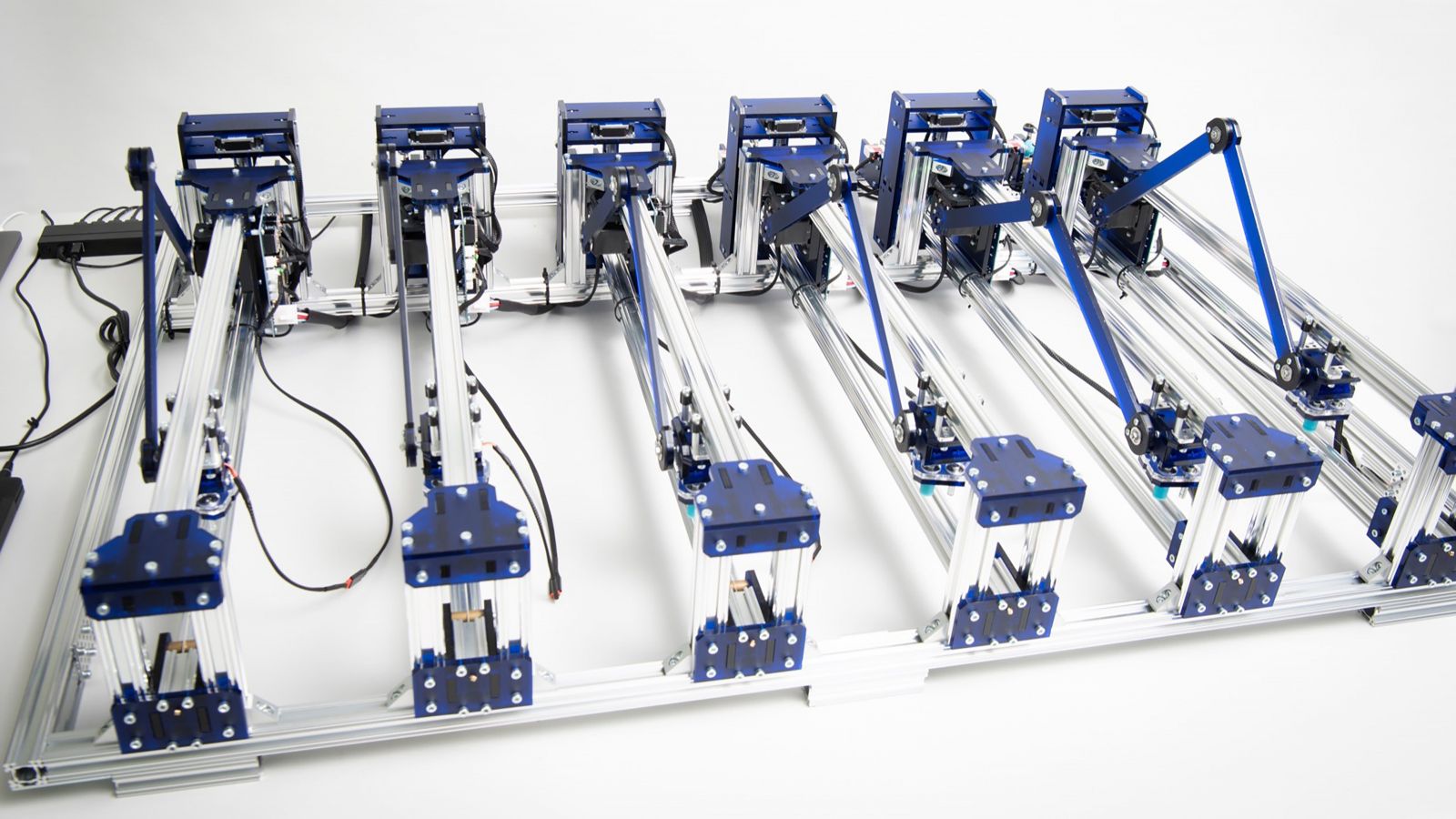

After researching possibilities and testing them on Protochord, JP and his supervisors built their final project, Azure Talos, a six-string chordophone capable of both automated and live music composition.

“We were hoping to make improvements to the design, but also to produce some creative work from our robot—both of which we achieved,” JP says.

The creators of guitar robots face several challenges, both in getting the robot to function at all and in designing and building one that can perform enjoyable music. Through the creation of Azure Talos, JP and his supervisors were able to address a number of these challenges.

First, Azure has a five-arm picking mechanism to play the strings, essentially a ‘pickwheel’, that can make 32 to 33 picks per second, which JP says is the fastest speed achieved by a guitar robot to date. For context, the fastest picking speeds achieved by a human guitarist are reportedly 23.5 to 26 picks per second.
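To give a rough sense of what those figures imply for the mechanism, the sketch below converts a pick rate into a wheel speed. It assumes each of the five arms strikes the string exactly once per revolution, which is not stated here, and the function name `wheel_rpm` is hypothetical.

```python
def wheel_rpm(picks_per_second: float, arms: int = 5) -> float:
    """Return the wheel speed in revolutions per minute.

    Assumption (not from the article): every arm picks the string
    exactly once per full revolution of the wheel.
    """
    if arms <= 0:
        raise ValueError("arms must be a positive integer")
    revolutions_per_second = picks_per_second / arms
    return revolutions_per_second * 60.0


if __name__ == "__main__":
    # Reported range for Azure Talos: 32 to 33 picks per second.
    for rate in (32, 33):
        print(f"{rate} picks/s -> about {wheel_rpm(rate):.0f} RPM")
```

Under that assumption, the reported pick rates correspond to a wheel turning at roughly 384 to 396 revolutions per minute.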

Azure is also capable of selecting notes accurately and precisely thanks to its robot arm mechanism, which uses a clamping carriage to apply pressure to the string at the desired location.
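As an illustration of what ‘the desired location’ means for a clamped string, the sketch below uses the standard equal-temperament relation between pitch and string length to work out where a clamp would need to sit. It is not taken from Azure’s control software: the function name `clamp_position_mm` and the 648 mm scale length (a common guitar value) are assumptions made for the example.

```python
def clamp_position_mm(semitones: float, scale_length_mm: float = 648.0) -> float:
    """Distance from the nut at which to clamp the string.

    Raising the pitch by `semitones` in equal temperament requires a
    vibrating length of scale_length / 2**(semitones / 12), so the clamp
    sits at the remaining distance from the nut.
    The default scale length is an assumed, typical guitar value.
    """
    if semitones < 0:
        raise ValueError("semitones must be zero or positive")
    if scale_length_mm <= 0:
        raise ValueError("scale_length_mm must be positive")
    return scale_length_mm * (1.0 - 2.0 ** (-semitones / 12.0))


if __name__ == "__main__":
    # Positions for the open string, a fifth above it, and an octave above it.
    for n in (0, 7, 12):
        print(f"+{n} semitones -> {clamp_position_mm(n):.1f} mm from the nut")
```

One consequence of this relation is that the positions bunch closer together as the pitch rises, so a positioning error of a given size matters more for higher notes.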

As well as improving Azure’s playing ability, JP and his supervisors were able to decrease the amount of mechanical noise created by the robot while it played music. This can be a big problem with guitar robots: it doesn’t matter how beautifully the robot plays if no one can hear it over the noise of its own machinery.

After the hard work to address these engineering challenges, it was time to try out the robot.

“I’m very proud of the creative process that was part of our research,” JP says. “Many guitar robots are documented exclusively from an engineering standpoint, but we actually had the opportunity to make music with Azure Talos.”

Azure’s musical repertoire includes such hits as Green Day’s Good Riddance (Time of Your Life), Kansas’ Dust in the Wind, and even Daisy Bell, which holds a special place in machine music history as the first song sung using computer speech synthesis, on an IBM computer in 1961.

JP and his supervisors also developed and tested Azure’s ability to perform algorithmic composition and to compose in real time, and created technical exercises and standard repertoire for guitar robots.

“Hopefully, the music we have tested out and the repertoire we have worked on will help other mechatronic artists and engineers get inspired and work more with guitar robots in future,” JP says.

JP is currently working as a web developer in Auckland. COVID-19 lockdowns have put some of his post-PhD plans on hold, but he hopes that 2022 and beyond will give him the opportunity to engage in more academic scholarship as well as creative endeavours with music and with robots.